- on: June 26, 2025

- in: Nature

- ✨Nature Journal Article

Controlling diverse robots by inferring Jacobian fields with deep networks

@article{lesterli2025unifyingrepresentationcontrol,
  title = { Controlling diverse robots by inferring Jacobian fields with deep networks },
  author = { Li, Sizhe and Zhang, Annan and Chen, Boyuan and Matusik, Hanna and Liu, Chao and Rus, Daniela and Sitzmann, Vincent },
  year = { 2025 },
  journal = { Nature },
}

TL;DR: We introduce Neural Jacobian Fields for closed-loop robot control from vision. From multi-view video of the robot executing random actions, we learn both the robot's 3D morphology and how its 3D points move under any command. We demonstrate control of both bio-inspired soft and multi-material robots and conventional piecewise-rigid robots using just a single video camera. Please see the video for a short summary and results!

Abstract

Mirroring the complex structures and diverse functions of natural organisms is a long-standing challenge in robotics. Modern fabrication techniques have dramatically expanded feasible hardware, yet deploying these systems requires control software to translate desired motions into actuator commands. While conventional robots can easily be modeled as rigid links connected via joints, it remains an open challenge to model and control bio-inspired robots that are often multi-material or soft, lack sensing capabilities, and may change their material properties with use. Here, we introduce Neural Jacobian Fields, an architecture that autonomously learns to model and control robots from vision alone. Our approach makes no assumptions about the robot’s materials, actuation, or sensing, requires only a single camera for control, and learns to control the robot without expert intervention by observing the execution of random commands. We demonstrate our method on a diverse set of robot manipulators, varying in actuation, materials, fabrication, and cost. Our approach achieves accurate closed-loop control and recovers the causal dynamic structure of each robot. By enabling robot control with a generic camera as the only sensor, we anticipate our work will dramatically broaden the design space of robotic systems and serve as a starting point for lowering the barrier to robotic automation.

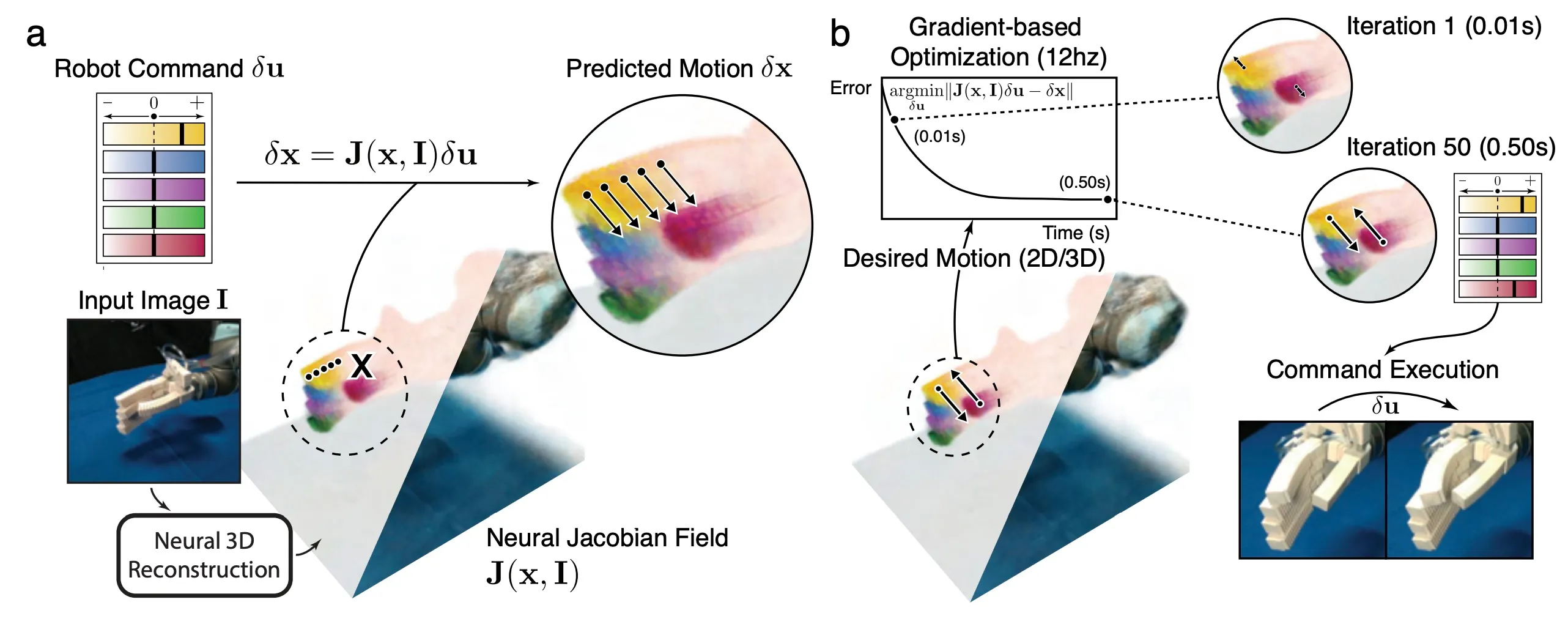

Controlling robots from vision via the Neural Jacobian Field

(a) Reconstruction of the Neural Jacobian Field and motion prediction. From a single image, a machine learning model infers a 3D representation of the robot in the scene, the Neural Jacobian Field. It encodes the robot's geometry and kinematics, allowing us to predict the 3D motion of robot surface points under all possible commands. Color indicates each point's sensitivity to individual command channels. (b) Closed-loop control from vision. Given desired motion trajectories in pixel space or in 3D, we use the Neural Jacobian Field to optimize for the robot command that would generate the prescribed motion, at an interactive rate of approximately 12 Hz. Executing the command on the real robot confirms that the desired motions are achieved.
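One way to read the Jacobian field is as a per-point linearization: for a 3D point x and a K-channel command, a 3×K matrix J(x) maps a command change δa to a displacement J(x)δa. The sketch below is a minimal illustration under that assumption, not the authors' implementation. It assumes the field has already been evaluated at N points (shape `(N, 3, K)`), and it uses damped least squares as a stand-in for the paper's command optimization. The function names `predict_motion` and `solve_command` and the `damping` parameter are hypothetical.

```python
import numpy as np


def predict_motion(jacobians: np.ndarray, delta_cmd: np.ndarray) -> np.ndarray:
    """Predict 3D displacements of N points for a command change.

    jacobians: (N, 3, K) per-point Jacobians (assumed already inferred from an image).
    delta_cmd: (K,) change in the robot command.
    Returns an (N, 3) array of predicted displacements.
    """
    # Batched matmul: (N, 3, K) @ (K,) -> (N, 3)
    return jacobians @ delta_cmd


def solve_command(jacobians: np.ndarray, target_motion: np.ndarray,
                  damping: float = 1e-3) -> np.ndarray:
    """Find the command change whose predicted motion best matches target_motion.

    Solves the damped least-squares problem
        min_x ||A x - b||^2 + damping * ||x||^2
    where A stacks every point's 3 x K Jacobian rows and b stacks the target motions.
    This is an assumed stand-in for the paper's optimizer.
    """
    n, _, k = jacobians.shape
    a = jacobians.reshape(n * 3, k)        # (3N, K): rows ordered point by point
    b = target_motion.reshape(n * 3)       # (3N,): same ordering as a
    lhs = a.T @ a + damping * np.eye(k)    # normal equations with Tikhonov damping
    rhs = a.T @ b
    return np.linalg.solve(lhs, rhs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    J = rng.normal(size=(50, 3, 4))        # made-up field: 50 points, 4 channels
    true_cmd = np.array([0.5, -0.2, 0.1, 0.3])
    target = predict_motion(J, true_cmd)   # synthesize a reachable target motion
    est = solve_command(J, target, damping=1e-8)
    print(np.allclose(est, true_cmd, atol=1e-5))  # True
```

In a closed loop, one would re-infer the field from each new camera frame and re-solve for the next command. The damping term keeps the solve well-posed when some command channels barely move the tracked points.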

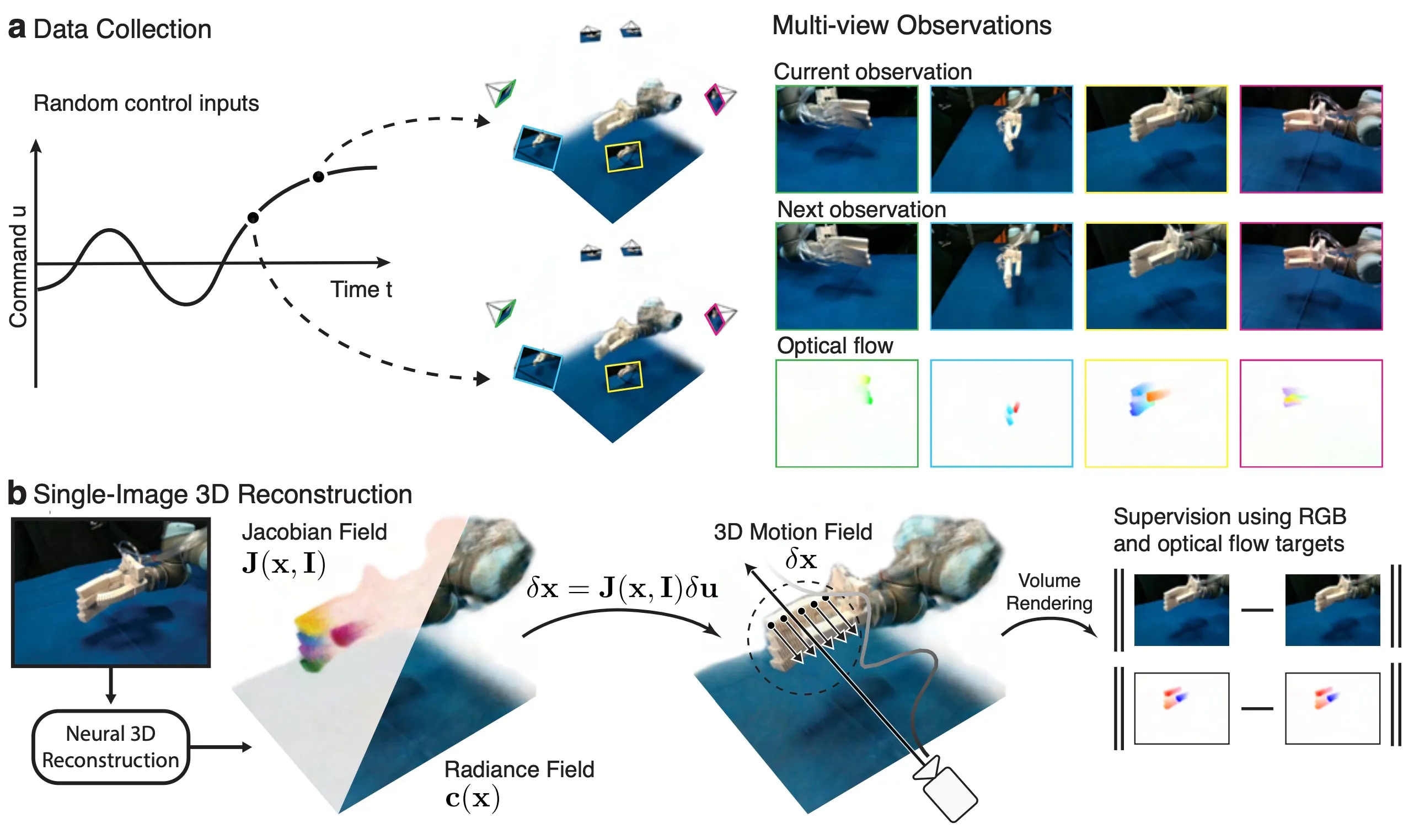

Training

Our model requires only multi-view video of the robot executing random actions to learn both its 3D morphology and its control via the Neural Jacobian Field. Figure above: (a) Our data collection process samples random control commands to be executed on the robot. Using a setup of 12 RGB-D cameras, we record multi-view captures before each command is executed and again after the robot has settled to a steady state under that command. (b) Our method first performs neural 3D reconstruction, taking a single RGB image as input and outputting the Jacobian field and the radiance field. Given a robot command, we compute the 3D motion field using the Jacobian field. The framework is trained with full self-supervision by rendering the motion field into optical flow images and the radiance field into RGB-D images.
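To make the self-supervision signal concrete, here is a minimal sketch of a flow-consistency loss. It is a simplification under stated assumptions, not the paper's training code. The paper renders the motion field into optical flow images; this sketch skips rendering and instead projects 3D points through an assumed pinhole camera, so that the 2D flow induced by the predicted 3D motion can be compared with an observed flow. The intrinsics tuple, the uniform command range, and all function names are hypothetical.

```python
import numpy as np


def project(points: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Pinhole projection of (N, 3) camera-frame points to (N, 2) pixel coordinates."""
    z = points[:, 2]
    u = fx * points[:, 0] / z + cx
    v = fy * points[:, 1] / z + cy
    return np.stack([u, v], axis=1)


def predicted_flow(points: np.ndarray, jacobians: np.ndarray,
                   delta_cmd: np.ndarray, intrinsics: tuple) -> np.ndarray:
    """2D flow induced by the 3D motion that the Jacobians predict for delta_cmd."""
    moved = points + jacobians @ delta_cmd           # (N, 3) displaced points
    return project(moved, *intrinsics) - project(points, *intrinsics)


def flow_loss(points: np.ndarray, jacobians: np.ndarray, delta_cmd: np.ndarray,
              observed_flow: np.ndarray, intrinsics: tuple) -> float:
    """Mean squared pixel error between predicted and observed flow vectors."""
    diff = predicted_flow(points, jacobians, delta_cmd, intrinsics) - observed_flow
    return float(np.mean(np.sum(diff ** 2, axis=1)))


def sample_random_command(rng: np.random.Generator, k: int,
                          low: float = -1.0, high: float = 1.0) -> np.ndarray:
    """Uniformly random K-channel command (assumed range) for data collection."""
    return rng.uniform(low, high, size=k)
```

In a real pipeline, `observed_flow` would come from the before/after captures, and the loss would be backpropagated into the network that predicts the Jacobians. The NumPy version here only shows the forward computation.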

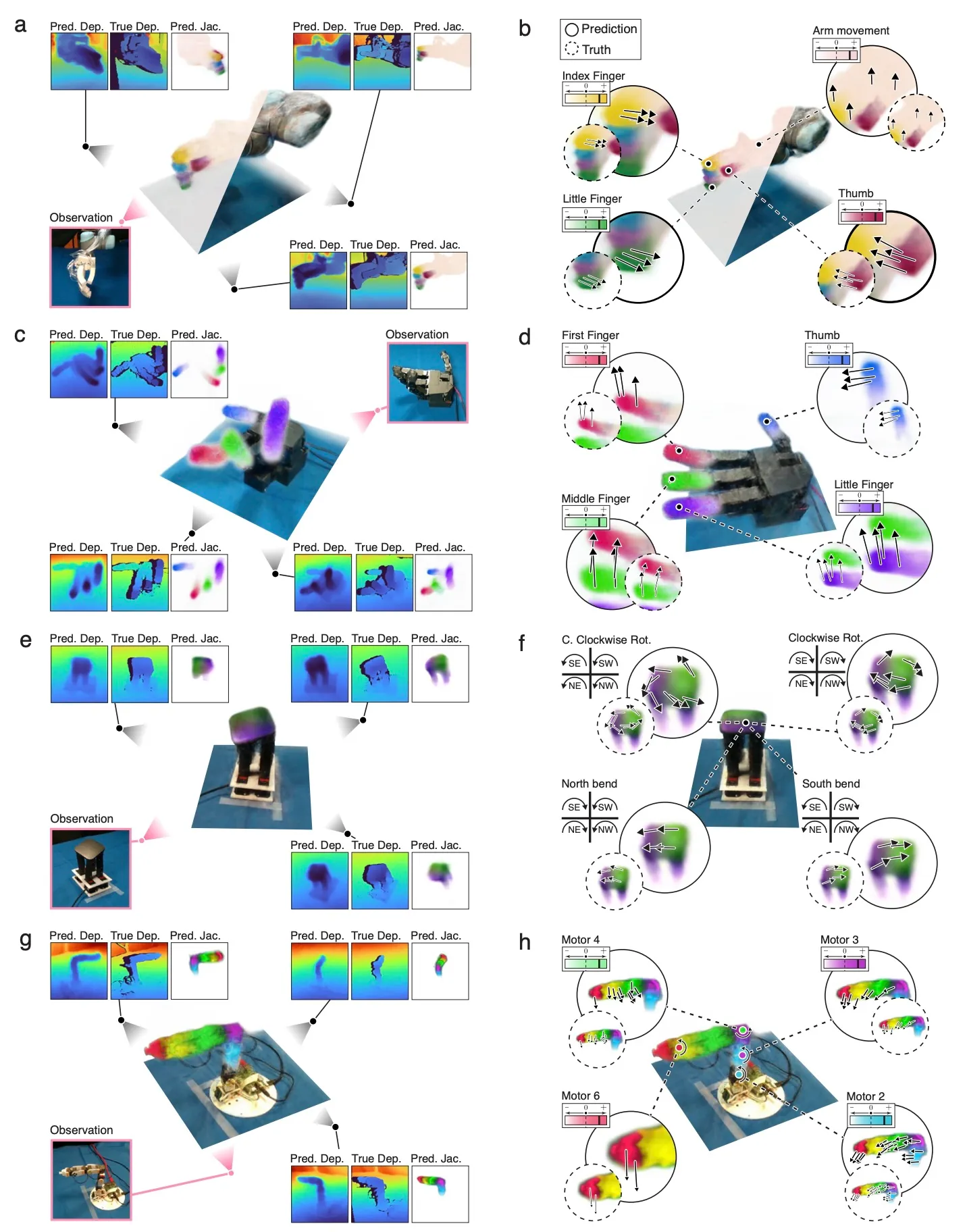

Reconstructing and Controlling Diverse Robots in Real-Time from Only a Single Camera Video Stream

We show that the exact same approach reconstructs both conventional robots and soft, multi-material robots, without any robot-specific manual effort. Figure above: (a, c, e, g) Visualization of the reconstructed Jacobian and radiance fields (center) and comparison of reconstructed and measured geometry (sides) from a single input image. Color indicates the motion sensitivity of each 3D point to the different actuator command channels, showing that our system learns the correspondence between the robot's 3D parts and its command channels without human annotations. Depth predictions appear next to RGB-D camera measurements, demonstrating accurate 3D reconstruction across all systems. (b, d, f, h) 3D motions predicted via the Jacobian field. We display the motions predicted by the Neural Jacobian Field (solid circles) for various motor commands next to reference motions reconstructed from video streams via point tracking (dotted circles). Reconstructed motions are qualitatively accurate across all robotic systems. Although we manually choose the color for each command channel, our framework associates command channels with 3D motions without supervision.
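The color coding can be understood as a readout of the Jacobian field. The sketch below is illustrative only and assumes the same hypothetical `(N, 3, K)` layout, with one 3D column per command channel. It takes the norm of each column as a point's sensitivity to that channel. That choice, the `threshold` for static points, and the `palette` (the manually chosen per-channel colors) are our assumptions, not details from the paper.

```python
import numpy as np


def channel_sensitivity(jacobians: np.ndarray) -> np.ndarray:
    """Per-point, per-channel sensitivity: the norm of each 3D Jacobian column.

    jacobians: (N, 3, K) -> returns (N, K).
    """
    return np.linalg.norm(jacobians, axis=1)


def dominant_channel(jacobians: np.ndarray, threshold: float = 1e-6) -> np.ndarray:
    """Index of the channel each point responds to most strongly.

    Points whose largest sensitivity is below threshold (e.g. background or a fixed
    base) are marked -1.
    """
    sens = channel_sensitivity(jacobians)
    idx = np.argmax(sens, axis=1)
    idx[sens.max(axis=1) < threshold] = -1
    return idx


def color_points(jacobians: np.ndarray, palette: np.ndarray) -> np.ndarray:
    """Blend per-channel RGB colors (palette: (K, 3)) by normalized sensitivity.

    Static points end up black because all their weights are zero.
    """
    sens = channel_sensitivity(jacobians)
    weights = sens / np.maximum(sens.sum(axis=1, keepdims=True), 1e-12)
    return weights @ palette  # (N, K) @ (K, 3) -> (N, 3)
```

Because the sensitivities come from the learned Jacobians, the part-to-channel grouping falls out of the model. Only the palette is chosen by hand, which mirrors the distinction the caption draws.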